Products Overview

Accelerator IP for AI Designs

AI Accelerator IP That Scales from Edge Devices to Data Centers

The design of AI systems requires a careful balance of performance, power, and area (PPA), along with latency. Origin Evolution™ NPU IP manages the distinct workload and memory requirements of applications that range from edge devices to automotive solutions and data centers. It is customizable for specific use cases and optimized to deliver the best PPA while handling a variety of models, including large language models (LLMs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and others.

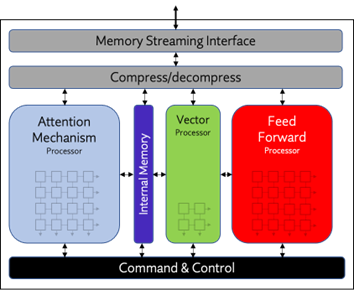

Optimized Architecture for Your Trained Networks

Origin Evolution offers unparalleled accuracy and predictable performance without requiring any hardware-specific optimizations or modifications to your trained model. Its patented packet-based execution architecture delivers single-core performance of up to 128 TFLOPS and maintains utilization rates of 70-90%. These metrics are measured on silicon while running typical AI workloads like Llama and YOLO. This outstanding performance and efficiency allow users to execute LLM, CNN, and other AI models with significantly lower power consumption compared to alternative solutions.
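As a rough illustration of what the figures above imply, the sketch below multiplies the quoted single-core peak by the quoted utilization range to estimate sustained throughput. The function name is hypothetical, and treating sustained throughput as peak times utilization is a simplifying assumption rather than a published Expedera formula.

```python
# Illustrative arithmetic only; sustained_tflops is a hypothetical helper.
PEAK_TFLOPS = 128.0               # single-core peak quoted in the text
UTILIZATION_RANGE = (0.70, 0.90)  # utilization range quoted in the text


def sustained_tflops(peak_tflops: float, utilization: float) -> float:
    """Estimate sustained throughput as peak throughput times utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_tflops * utilization


low, high = (sustained_tflops(PEAK_TFLOPS, u) for u in UTILIZATION_RANGE)
print(f"{low:.1f} to {high:.1f} TFLOPS sustained")
# prints: 89.6 to 115.2 TFLOPS sustained
```

Actual sustained throughput depends on the network, precision, and memory configuration, so these numbers are best read as a bound implied by the quoted range.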

Silicon-proven and third-party tested, Expedera’s architecture is extremely power-efficient, achieving 18 TOPS/W. Origin Evolution NPUs offer deterministic performance and the smallest memory footprint, and they are fully scalable. This makes them well suited both to edge solutions with little or no DRAM bandwidth and to high-performance applications such as autonomous driving. Expedera’s IP is ASIL-B readiness-certified, giving engineers a single NPU architecture that easily runs LLM, CNN, RNN, and other network types while maintaining optimal processing performance, power, and area.
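To put the 18 TOPS/W figure in context, the sketch below converts a throughput target into an estimated power draw. It assumes the quoted efficiency holds at every operating point, which the text does not claim; the function name and the example throughput values are hypothetical.

```python
# Illustrative arithmetic only; assumes constant efficiency at all throughputs.
TOPS_PER_WATT = 18.0  # efficiency figure quoted in the text


def estimated_power_watts(tops: float, tops_per_watt: float = TOPS_PER_WATT) -> float:
    """Estimate power draw in watts as throughput divided by efficiency."""
    if tops < 0:
        raise ValueError("tops must be non-negative")
    if tops_per_watt <= 0:
        raise ValueError("tops_per_watt must be positive")
    return tops / tops_per_watt


# Hypothetical throughput targets, chosen only to show the scaling.
for target_tops in (1, 32, 128):
    watts = estimated_power_watts(target_tops)
    print(f"{target_tops:>3} TOPS -> about {watts:.2f} W")
```

Real power consumption varies with process node, configuration, and workload, so a figure derived this way is a first-order estimate at best.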

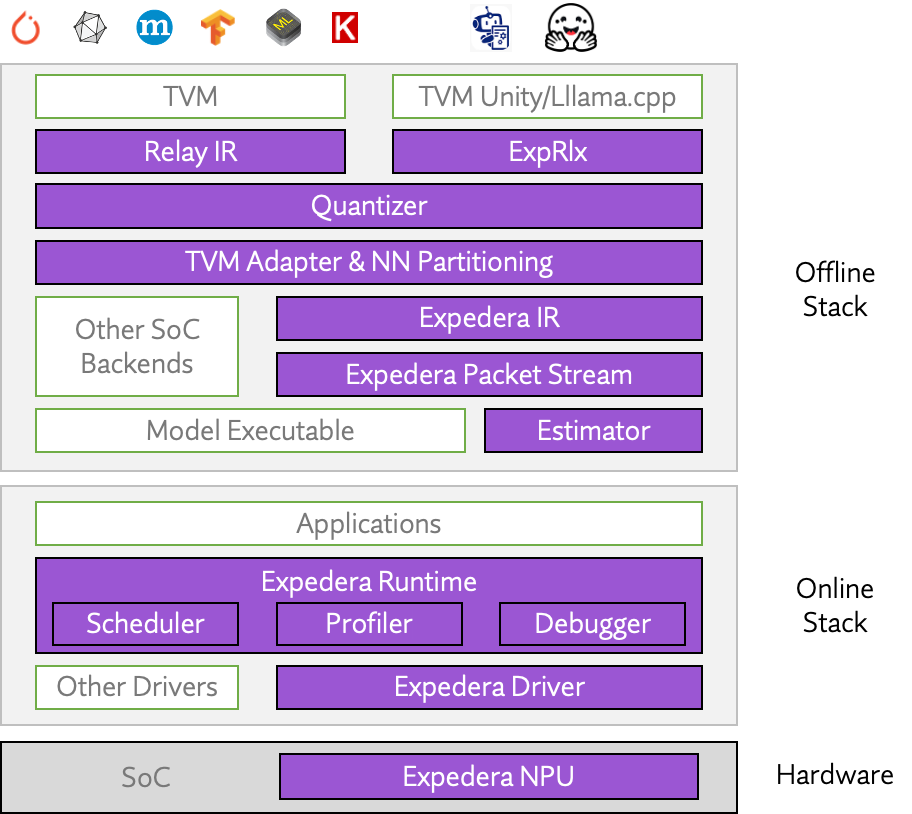

Full Software Stack

Expedera’s Origin Evolution IP platform includes a full TVM- and Relax-based software stack that lets developers work efficiently as they deploy their networks on the target hardware. It supports popular frameworks such as Hugging Face, llama.cpp, PyTorch, ONNX, TensorFlow, TVM, and others. Users can deploy their trained models as-is, with no retraining or accuracy reductions required. The software stack automatically handles tasks such as packetization and debugging while providing user-friendly options such as mixed-precision (integer and floating-point) quantization using your tools or ours, custom layer and network support, and multi-job APIs.

Origin Line of Products

Origin E1

The Origin E1 processing cores are individually optimized for a subset of neural networks commonly used in home appliances, edge nodes, and other small consumer devices. The E1 LittleNPU supports always-sensing cameras found in smartphones, smart doorbells, and security cameras.

Origin E2

The Origin E2 is designed for power-sensitive on-chip applications that require no off-chip memory. It is suitable for low-power applications such as mobile phones and edge nodes and, like all Expedera NPUs, is tunable to specific workloads.

Origin E6

Origin E6, optimized to balance power and performance, uses SoC cache or DRAM access during runtime and supports advanced system memory management. Supporting multiple jobs, the E6 runs a wide range of AI models in smartphones, tablets, edge servers, and other devices.

Origin E8

Origin E8 is designed for high-performance applications required by autonomous vehicles/ADAS and data centers. It offers superior TOPS performance while dramatically reducing DRAM requirements and system BOM costs, as well as enabling multi-job support. Even at 128 TOPS, its low power consumption makes the Origin E8 a good fit for deployments in passively cooled environments.

TimbreAI T3

TimbreAI T3 is an ultra-low power Artificial Intelligence (AI) inference engine designed for noise reduction use cases in power-constrained devices such as headsets. TimbreAI requires no external memory access, saving system power while increasing performance and reducing chip size.

Products

Origin E1

Origin E1 neural engines are optimized for networks commonly used in always-on applications in home appliances, smartphones, and edge nodes that require about 1 TOPS performance. The E1 LittleNPU processors are further streamlined, making them ideal for the most cost- and area-sensitive applications.

Origin E2

Origin E2 NPU cores are power- and area-optimized to save system power in smartphones, edge nodes, and other consumer and industrial devices. Through careful attention to processor utilization and memory requirements, E2 NPUs deliver optimal performance with minimal latency. The E2 is highly configurable, offering 1 to 20 TOPS of performance and supporting common RNN, LSTM, CNN, DNN, and other network types.

Origin E6

Origin E6 NPU IP cores are performance-optimized for smartphones, AR/VR headsets, and other device applications that require image transformer, Stable Diffusion, and point cloud-related AI. Through careful attention to processor utilization and external memory usage, E6 NPUs improve power efficiency and reduce latency to an absolute minimum. They offer single-core performance from 16 to 32 TOPS.

Origin E8

Designed for performance-intensive applications such as automotive/ADAS and data centers, Origin E8 NPU IP cores excel at complex AI tasks, including computer vision, LLMs, warping, point cloud, grid sampling, image classification, and object detection. They offer single-core performance ranging from 32 to 128 TOPS.
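The single-core ranges quoted for the Origin E1 through E8 families can be collected into a small lookup table. The sketch below is purely illustrative: the `Family` type and `candidate_families` function are hypothetical names, and treating the E1's "about 1 TOPS" as an upper bound of 1 TOPS is an assumption made for the example.

```python
from typing import List, NamedTuple


class Family(NamedTuple):
    name: str
    min_tops: float
    max_tops: float


# Ranges taken from the product descriptions above.
ORIGIN_FAMILIES = [
    Family("Origin E1", 0.0, 1.0),    # assumption: "about 1 TOPS" used as a ceiling
    Family("Origin E2", 1.0, 20.0),
    Family("Origin E6", 16.0, 32.0),
    Family("Origin E8", 32.0, 128.0),
]


def candidate_families(required_tops: float) -> List[str]:
    """Return every family whose quoted single-core range covers the requirement."""
    return [
        family.name
        for family in ORIGIN_FAMILIES
        if family.min_tops <= required_tops <= family.max_tops
    ]


print(candidate_families(18))   # ['Origin E2', 'Origin E6']
print(candidate_families(64))   # ['Origin E8']
```

Because the quoted ranges overlap, a requirement such as 18 TOPS matches more than one family; throughput alone does not settle the choice, and factors such as memory architecture and power budget described above would also apply.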

TimbreAI T3

TimbreAI T3 is an ultra-low power Artificial Intelligence (AI) inference engine designed for noise reduction use cases in power-constrained devices such as headsets. TimbreAI requires no external memory access, saving system power while increasing performance and reducing chip size.

Origin Evolution for Automotive

Designed for performance-intensive automotive/ADAS applications, Origin Evolution for Automotive NPU IP cores excel at complex AI tasks, including computer vision, LLMs, warping, point cloud, grid sampling, image classification, and object detection. Performance scales to 96 TFLOPS in a single core, with multi-core performance scaling to petaFLOPS.

Origin Evolution for Data Center

Able to support a large number and varied mix of neural networks, Origin Evolution for Data Center offers the most power- and performance-friendly AI inference solution. Its NPU IP scales to 128 TFLOPS in a single core, with multi-core performance scaling to petaFLOPS.

Origin Evolution for Edge

With out-of-the-box compatibility with popular LLM and CNN networks, Origin Evolution for Edge offers optimal AI performance across various networks and representations. Its highly optimized NPU IP solutions are customizable and scale to 32 TFLOPS in a single core to address the most advanced edge inference needs.

Origin Evolution for Mobile

Supporting Multimodal Large Language Models (MLLMs), Origin Evolution for Mobile offers smartphone makers highly efficient AI inference solutions that enable the advanced features customers want. Its field-proven NPU IP solutions are customizable and scale to 64 TFLOPS in a single core.
